The creative and ill-structured nature of graphic design tasks, as exemplified in computer graphics design settings, makes valid and reliable assessment difficult (Bartholomew, 2017; Neumann, 1988; Pollitt, 2004, 2012). Further, best practices in teaching and assessment, such as providing formative feedback to students, are hampered by issues of scalability and efficiency (Carless, Salter, Yang, & Lam, 2011). Finally, there is growing evidence that good design may be a function of group consensus rather than of the opinions of a few extensively trained individuals (e.g., teachers; Neumann, 1988). Together, these factors have led to an increased emphasis on researching new approaches to improving graphic design education (Utaberta et al., 2013).

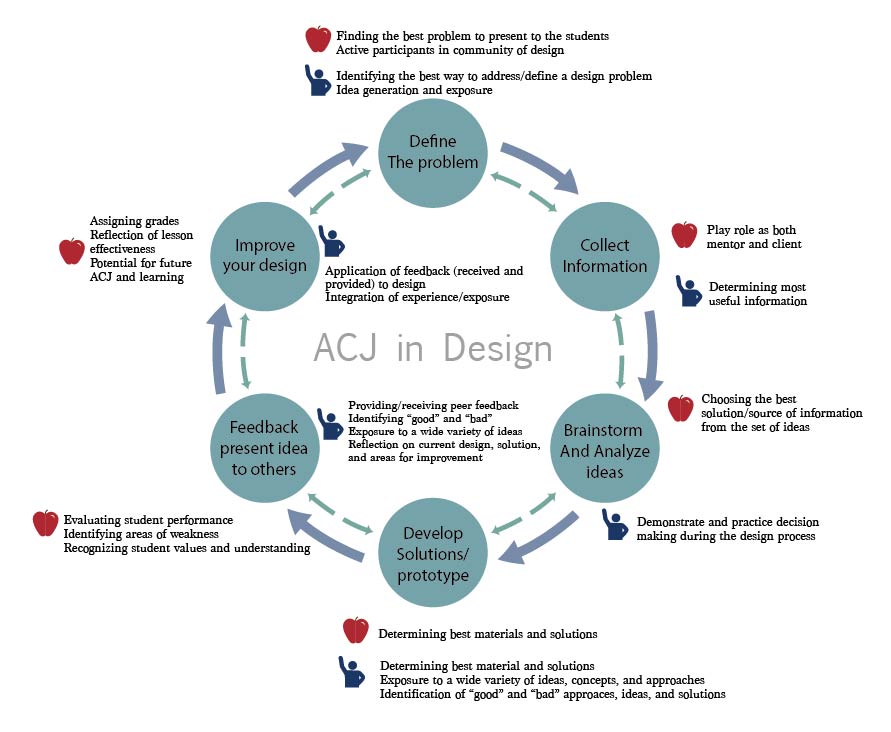

Adaptive comparative judgment (ACJ), a relatively new assessment approach designed for assessing solutions to open-ended problems (Pollitt, 2012), has demonstrated great potential for improving the reliability of evaluations of student design work (Bartholomew, Hartell, & Strimel, 2017; Seery, Delahunty, Canty, & Buckley, 2017). However, this approach has traditionally been used in engineering and technology design settings and has not been widely adopted in graphic design classrooms (Bartholomew & Yoshikawa, 2018; Newhouse, 2014). ACJ differs from traditional assessment approaches in that assessors make holistic comparative judgments, contrasting two design artifacts and picking the better of the two, rather than scoring student work against a list of criteria, or traits, using a rubric. Research has shown that humans make a decision between two items significantly more reliably than they make a subjective judgment of the quality of a single artifact in isolation (Pollitt, 2004, 2012).
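To make the comparative mechanism more concrete, the following is a minimal sketch of how a set of pairwise "which is better?" decisions can be turned into a single rank order. It is not the algorithm of any particular ACJ tool. ACJ systems are generally described as fitting a Rasch-type model to the judgments; as an assumption, this sketch substitutes the closely related Bradley-Terry model, P(i beats j) = s_i / (s_i + s_j), estimated with a simple iterative update, and it omits the adaptive step that chooses which pairs judges see. The function name `bradley_terry` and the item labels are hypothetical.

```python
from collections import defaultdict


def bradley_terry(judgments, iterations=500):
    """Estimate a relative quality score for each item from pairwise judgments.

    ASSUMPTION: uses the Bradley-Terry model, P(i beats j) = s_i / (s_i + s_j),
    as a stand-in for the Rasch-type model used by ACJ tools; the adaptive
    pairing step of ACJ is not modeled here.

    judgments: list of (winner, loser) tuples naming two different items.
    Returns a dict mapping item -> strength, scaled so strengths average to 1.
    """
    items = sorted({item for pair in judgments for item in pair})
    wins = defaultdict(int)      # number of comparisons each item won
    meetings = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in judgments:
        wins[winner] += 1
        meetings[frozenset((winner, loser))] += 1

    strength = {item: 1.0 for item in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            # Minorization-maximization update:
            # s_i <- wins_i / sum over opponents j of n_ij / (s_i + s_j)
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in meetings.items()
                if i in pair
                for j in pair - {i}
            )
            updated[i] = wins[i] / denom
        # The model's scale is arbitrary, so rescale to a mean strength of 1.
        total = sum(updated.values())
        strength = {i: len(items) * s / total for i, s in updated.items()}
    return strength


# Hypothetical example: three design artifacts, each pair judged three times.
judgments = [
    ("A", "B"), ("A", "B"), ("B", "A"),
    ("B", "C"), ("B", "C"), ("C", "B"),
    ("A", "C"), ("A", "C"), ("C", "A"),
]
scores = bradley_terry(judgments)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['A', 'B', 'C']
```

Because only relative decisions enter the model, no rubric scores are needed; the fitted strengths place every artifact on one scale from which a rank order can be read off. In this simple version an item that never wins receives a strength of zero, so a fuller implementation would need to handle such edge cases (for example, with a prior).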

In addition to improving reliability in assessment, ACJ has shown promise in formative settings for student learning and achievement (Bartholomew, Strimel, & Yoshikawa, 2018; Seery & Canty, 2017) and has demonstrated the ability to expedite and scale up the formative assessment and feedback process (Bartholomew, Garcia-Bravo, Strimel, & Zhang, 2018). In particular, when students act as judges in ACJ sessions, viewing pairs of peer work and identifying the better item in the ACJ interface, their own learning, understanding, and experience improve (Bartholomew & Yoshikawa, 2018; Bartholomew, Strimel, & Garcia-Bravo, 2018; Bartholomew, Strimel, & Yoshikawa, 2018). Further, involving students as judges of peer work substantially speeds up the feedback process, because each student quickly receives a large volume of peer feedback collected through ACJ.

Despite these findings related to reliability, student learning, and the scaling of formative feedback, we do not know when ACJ is most impactful. Research in summative settings (Seery & Canty, 2017) and in formative settings, where students engaged in ACJ in the middle of an assignment (Bartholomew, Strimel, & Yoshikawa, 2018), has shown promise. Research into using ACJ prior to an assignment (Bartholomew & Garcia-Bravo, 2018) has also shown promise for assisting students. Specifically, work done last year in CGT 118 suggests that infusing the curriculum with ACJ experiences results in significantly improved student learning, enjoyment, and engagement (Bartholomew & Garcia-Bravo, 2018). However, a clear pathway for when students should engage in ACJ (i.e., before an assignment, midway through it, or at its conclusion) to most positively impact their grades has not been defined. In short, we know ACJ is helpful for student learning, but we are not yet sure where in the learning progression to incorporate it.

This project, supported by SEED funding from the Future Work and Learning Research Impact Area of the Polytechnic Institute, leverages Dr. Bartholomew’s expertise and experience in ACJ, design assessment, and research projects. Co-PI Dr. Esteban Garcia-Bravo, who holds multiple degrees in graphic design, contributes expertise as the instructor of CGT 118. A graduate research assistant is assisting with all logistical preparations, including the ACJ accounts and items, as well as with data collection and analysis. As a first step, student work from the first assignment has been assessed and analyzed to ensure comparability between sections. Following comparability testing, Section A students used work from the prior year to conduct an ACJ session before beginning each of their assigned design projects. Section B students conducted an ACJ session at the midpoint of each project using their own work and that of their peers. Section C students conducted an ACJ session at the end of each project using their own work and their peers’ work.

At the conclusion of the course, all student work from each section will be gathered into a final ACJ session conducted by the instructors. Any relationships between the point in the design projects at which students participated in ACJ and their final achievement (as measured by their final rank in the concluding ACJ session and by the grades they receive through traditional rubric-based grading) will then be investigated to answer the research question of when ACJ is most beneficial.

Additional information:

Future Work and Learning is one of the college's five research impact areas. Research in this area explores the intersection between learning and work to develop and apply innovative approaches to education and workforce training that empower people to fulfill active roles in society through participation, engagement, and entrepreneurship.